- Aug 25, 2008

The Gap between the Web 2.0 and Semantic Web Community (tentative post)

Two days ago, BarCamp Traunsee, subtitled “Social Media Review Camp”, took place in Upper Austria. I co-organized it, and our own lil’ Semantic Web Company co-sponsored it. Andreas Blumauer (also SWC) joined me on the first day and hosted a session introducing Linked Data. Given the angle of the BarCamp, his audience consisted of Web 2.0 people (i.e. consultants, marketers, developers, communications people). So was he able to bridge the gap between 2.0 and 3.0?

Half a year ago, I was a complete newbie to the Semantic Web and Linked Data myself, and while the concept of the Semantic Web is undoubtedly as persuasive as a technological concept can possibly be, I remember how hard it was to come to grips with it (btw, I am a Humanities/Liberal Arts person). I think that Andreas’ presentation on Friday was probably the most accessible introduction to the topic I have witnessed thus far, and it allowed me to retrace once more where the biggest comprehension and communication issues probably lie.

When Semantic Web people start explaining their concepts to ‘other species’, they very soon start juggling acronyms and technical lingo, in particular names and abbreviations from the Semantic Web Stack – understandably so, as URIs, XML and RDF form the very foundation on the technological side. But the only concept where the Web 2.0 people (in particular those who approach it from the business, PR or marketing side) might still be with them is XML. Surprising as it may sound, not everyone is able to guess without context that the term URI refers to the same kind of thing as URL. And when you say RDF, people are surprisingly often inclined to think you are talking about “RFID” (Radio Frequency Identification) – it’s got, after all, also to do with unique identification, doesn’t it?

Just as the Semantic Web interfaces are only about to become more accessible to web 2.0 people (once more, hooray for Parallax), I think a VITAL next step in promoting the Semantic Web is to find human-readable explanations of its technologies.

The generic explanations all sound very good (“At the moment, we have a web of documents, but the Semantic Web aims for the web of data” or “The Semantic Web wants computers not only to be able to process, but also to understand data”), but what they fail to do is get non-tech people interested in the (workings of the) technology.

Without addressing technology, these generic explanations are just too bland to convey what is really exciting about the semantic web – yet as soon as SemWeb people start to talk technology, the acronym shower starts – see above. Dilemma.

Back to the BarCamp: I think that Andreas took a good approach in that he

a) kept the acronym level low

b) went on to explain how Linked Data can be a better source for mashups than APIs – because APIs really are the Holy Grail of the Web 2.0 community. I saw it happen before and I saw it happen at the BarCamp Traunsee – as soon as a new tool or feature is introduced, people start asking: “Does it have an API?” – “Will it have an API?” – “Can I get access to the API?” – “Is the API documentation online?”

What seems to be fixed in people’s minds is that you have to have an API to make mashups, and that mashups are what constitutes the miracle of the Web 2.0. So my simple advice for all Semantic Web evangelists would be:

If you want to develop a showcase that people understand, develop a mash-up, and more specifically one that uses data that average users would use and understand.

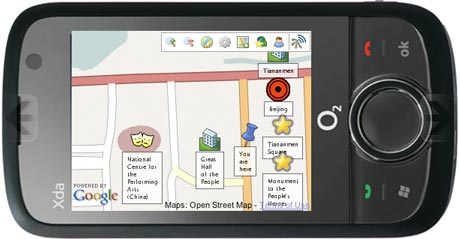

Develop something like DBpedia mobile (call up in emulator), and go into the details of the Semantic Web stack only after people have seen and understood that you don’t need an API (well, theoretically) or a huge programming effort to obtain structured, processable data.
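For readers who want to see what “no site-specific API” could look like in practice, here is a minimal Python sketch. It only builds the URL for a standard SPARQL query against DBpedia and does not send it. The endpoint address, the `format` parameter, the chosen properties and the helper name `build_query_url` are assumptions made for illustration; none of them come from Andreas’ session.

```python
from urllib.parse import urlencode

# Assumption: DBpedia's public SPARQL endpoint (not named in the post).
ENDPOINT = "https://dbpedia.org/sparql"


def build_query_url(place_label, limit=5):
    """Build a GET URL asking DBpedia for English abstracts of a labelled resource.

    Hypothetical helper: it assembles the request but does not send it.
    """
    query = f"""
    PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
    PREFIX dbo:  <http://dbpedia.org/ontology/>
    SELECT ?thing ?abstract WHERE {{
      ?thing rdfs:label "{place_label}"@en ;
             dbo:abstract ?abstract .
      FILTER (lang(?abstract) = "en")
    }} LIMIT {limit}
    """
    params = {
        "query": query,  # parameter name defined by the SPARQL protocol
        # Assumption: the endpoint accepts a `format` parameter for the result type.
        "format": "application/sparql-results+json",
    }
    return ENDPOINT + "?" + urlencode(params)


url = build_query_url("Traunsee")
print(url)
```

The point of the sketch is that the same kind of query would work for any resource and any property, whereas a typical Web 2.0 API offers only the calls its provider decided to expose.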

Btw, things got even more semantic on the second day of the BarCamp: Alexander Kirk presented his Factolex dictionary, a dictionary consisting of “short and concise explanations” which can be enhanced by tags, and which, because of their simplicity, would ideally lend themselves to conversion into triples. Alexander confirmed that he keeps semantic integration in mind while developing Factolex further.
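To illustrate why short, tagged explanations map so easily onto triples, here is a small Python sketch. It is not how Factolex works internally; the base URI, the predicate names and the function `entry_to_triples` are hypothetical and only serve to show the subject/predicate/object shape.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


# Hypothetical namespace, used only for this illustration.
BASE = "http://example.org/"


def entry_to_triples(term, explanation, tags):
    """Turn one dictionary entry (term, explanation, tags) into triples."""
    subject = BASE + "term/" + term.replace(" ", "_")
    # One triple carries the explanation text itself.
    triples = [Triple(subject, BASE + "vocab/explanation", explanation)]
    # Each tag becomes a further triple that links the term to a tag resource.
    for tag in tags:
        tag_uri = BASE + "tag/" + tag.replace(" ", "_")
        triples.append(Triple(subject, BASE + "vocab/taggedWith", tag_uri))
    return triples


triples = entry_to_triples(
    "Linked Data",
    "A method of publishing structured data on the web.",
    ["semantic web", "rdf"],
)
for triple in triples:
    print(triple)
```

Because every entry boils down to “this term has this explanation” and “this term has this tag”, each statement already has the three-part form that RDF expects.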

Alexander’s presentation was followed by input from Michael Schuster (who hasn’t yet put his session online, and I seem unable to remember the names of the sites he uses and showed us). One of them was a tool that uses natural language processing to interpret user notes, and which is able to decide, for instance, whether an entry should be added to the calendar or to a to-do list.

Nifty tool (and I hope I’ll be able to provide a link later), but what I mostly remember his presentation for is that he presented it as an example of a “dirty semantic web approach”, making it sound like something diametrically opposed to the (potentially anal) endeavours of those who rely on the Semantic Web stack.

But why open up this binary opposition? You can and must have both: semantic technologies like NLP, and open standards such as those defined in the Semantic Web stack.

It’s not like one is for the ‘cool kids’ (or web 2.0 kids) and the other one for the ‘geeks’ – if anything, then I’d say that the ‘cool kids’ are probably more interested in improving the service of just their site (making the industry and software market more diverse, if there are enough of them), whereas the ‘geeks’ work towards global exchange through the definition and further development of open standards (and make sure the ‘cool kids’ don’t get trapped in their data silos).

In the end, once the Semantic Web reaches maturity, it will need both of them.
