
- Sep 8, 2008

Linked Data @ TRIPLE-I: Measuring the size of a fact, not of a fiction

The TRIPLE-I 2008 conference ended three days ago, yet there are a couple of loose ends I’d still like to tie up. First of all: Linked Data. Tom Heath was invited to give a keynote on “Humans and the Web of Data”. People may come across Tom and his LOD-related work in a variety of roles:

He administers the site LinkedData.org (on behalf of the Linked Data community). He is the creator of Revyu.com (“Review anything!”), which won him the 1st prize in the Semantic Web Challenge 2007. He was a co-organizer of the Linked Data on the Web Workshop at this year’s World Wide Web conference in Beijing. And he was an interviewee in my 12 seconds definitions mission @ TRIPLE-I: see his micro definition of Linked Data in the vid below. (To learn more about Tom and the different roles he fulfils, look here).

Tom Heath explains Linked Data TRIPLE-I 2008 on 12seconds.tv

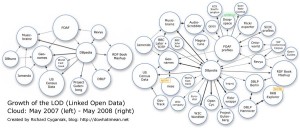

His keynote was not so much an introduction to Linked Data (I would expect a conference like TRIPLE-I/I-Semantics to attract people who at least have an idea of what Linked Data is about), but rather a confirmation that the Web of Data is no longer a fiction, but a fact. One of the often-cited proofs is the growth of the LOD dataset cloud over the last year, as shown in the image below (clicky for biggy, visualization created by Richard Cyganiak).

At the same time, it’s not just the sheer number of triples on the web that counts. This was acknowledged in a later presentation given by Wolfgang Halb, which had been prepared collaboratively by Tom, Wolfgang, Michael Hausenblas and Yves Raimond. Over the course of one year, the efforts of the Linked Data community (who seek to populate the web with open data, data in RDF) generated 4 billion triples, but only 3 million interlinks.

Their paper was an attempt to measure the size of the Semantic Web based on interlinks. A brief excerpt from the conclusion:

We have identified two different types of datasets, namely single-point-of-access datasets (such as DBpedia), and distributed datasets (e.g. the FOAF-o-sphere). At least for the single-point-of-access datasets it seems that automatic interlinking yields a high number of semantic links, however of rather shallow quality. Our finding was that not only the number of triples is relevant, but also how the datasets both internally and externally are interlinked. Based on this observation we will further research into other types of Semantic Web data and propose a metric for gauging it, based on the quality and quantity of the semantic links. We expect similar mechanisms (for example regarding automatic interlinking) to take place on the Semantic Web.
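To make the triples-versus-interlinks distinction a bit more tangible, here is a minimal sketch in Python. It is not the method used in the paper. It assumes, purely for illustration, that a dataset can be identified by the host name of a URI, and that an "interlink" is any triple whose subject and object URIs live on different hosts. The function names and the `a.example` / `b.example` sample data are made up.

```python
from urllib.parse import urlparse


def dataset_of(term):
    """Return the host of a URI term, or None for literals.

    Assumption for this sketch: one host name equals one dataset.
    """
    if not term.startswith(("http://", "https://")):
        return None
    return urlparse(term).netloc


def count_interlinks(triples):
    """Count all triples and those linking two different datasets."""
    total = 0
    interlinks = 0
    for subject, _predicate, obj in triples:
        total += 1
        src, dst = dataset_of(subject), dataset_of(obj)
        # Only URI-to-URI triples that cross a host boundary count as interlinks.
        if src and dst and src != dst:
            interlinks += 1
    return total, interlinks


# Made-up sample data: one literal, one cross-dataset link, one internal link.
triples = [
    ("http://a.example/city/1", "http://www.w3.org/2000/01/rdf-schema#label", "Graz"),
    ("http://a.example/city/1", "http://www.w3.org/2002/07/owl#sameAs", "http://b.example/place/42"),
    ("http://a.example/city/1", "http://a.example/prop/country", "http://a.example/country/7"),
]

total, interlinks = count_interlinks(triples)
print(f"{total} triples, {interlinks} interlinks")
```

Even in this toy example most triples stay inside one dataset, which is the same imbalance the billions-versus-millions figures point to. A count like this says nothing about the quality of the links, which is the part the authors want their future metric to cover.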

Another point raised by Tom in his keynote was the issue of trust. According to his research, five parameters influence whether or not we trust a source or recommendation on the web: experience, expertise, impartiality (we don’t trust a travel agent, because we can’t help but believe that she is mainly going to recommend the offers of her ‘favourite’ clients), affinity, and track record, with experience, expertise and affinity being the most important ones. A semantic people search engine Tom presented, Hoonoh.com (currently in alpha), thus lets users weight search results according to these three criteria.
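How Hoonoh.com actually computes its rankings was not part of the talk as far as I can report, so the following is only a guess at what "weighting by three criteria" could look like: a plain weighted average over per-person ratings. The rating values, the 0 to 1 scale, and the function names are all assumptions made for this sketch.

```python
def trust_score(ratings, weights):
    """Weighted average of the ratings; criteria without a rating count as 0."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        return 0.0
    weighted = sum(w * ratings.get(name, 0.0) for name, w in weights.items())
    return weighted / total_weight


def rank(candidates, weights):
    """Sort candidates from most to least trusted under the given weights."""
    return sorted(
        candidates,
        key=lambda c: trust_score(c["ratings"], weights),
        reverse=True,
    )


# Hypothetical people with made-up ratings on a 0..1 scale.
candidates = [
    {"name": "alice", "ratings": {"experience": 0.9, "expertise": 0.4, "affinity": 0.2}},
    {"name": "bob", "ratings": {"experience": 0.3, "expertise": 0.8, "affinity": 0.9}},
]

# A user who cares most about affinity, then one who cares most about experience.
by_affinity = rank(candidates, {"experience": 1.0, "expertise": 1.0, "affinity": 2.0})
by_experience = rank(candidates, {"experience": 3.0, "expertise": 1.0, "affinity": 0.0})
print([c["name"] for c in by_affinity], [c["name"] for c in by_experience])
```

The point of the sketch is simply that the same set of people can come out in a different order depending on which of the three criteria the searcher chooses to emphasize.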

Tom’s concluding statement emphasized that Linking Data makes sense not for its own sake, but in the service of humans: “A web of machine-readable data is even more interesting from a human than from a machine perspective,” for instance in search engines like Hoonoh.com.
