
- Oct 23, 2008

Multimedia in the Web of Data – Annotating and Interlinking Photos, Music, Multimedia [WOD-PD]

The Web of Data Practitioners Days concluded with the session on Multimedia in the Web of Data, the first part of which was led by Ansgar Scherp (University of Koblenz-Landau, Germany).

Multimedia content, as Ansgar pointed out, is hardly annotated, badly organized, and hardly ever looked at again – just think of the 300-odd pics you might take on an average weekend getaway and never touch again. Annotating multimedia content requires a lot of work and dedication, so most of the time these pictures eventually disappear into the “digital shoe box” that is your photo management software.

The most obvious remedy is to annotate content as early as possible, ideally at the moment it is created, and ideally right on your portable camera (formerly known as: mobile phone) :) Ansgar suggested providing incentives to encourage picture annotation – professionals could, for instance, receive a higher financial reward if they deliver pictures that are already annotated. And of course there are ‘Games with a purpose’ such as Google Image Labeler, where players tag images in pairs, with and against each other, and are rewarded with the entertainment factor of the game.

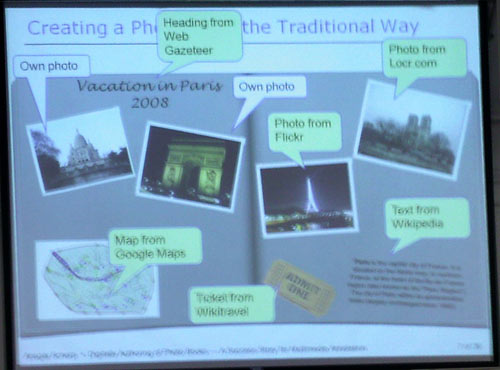

The slide below shows what has happened (or will happen) to the process of creating photo books in the digital age and the age of mashups:

After all, this is the age of the social semantic web, so why not try and (re-)use the content, structure and contexts that other users have already created on the web? Content augmentation, for the scope that Ansgar is concerned with, consists of reusing content and structures (e.g. from sources such as Flickr, Wikipedia and GeoNames), which is made possible by defining rules, e.g.:

- If there are two or fewer pictures on a page*

- then automatically augment the page with additional photos using location information.

* Page here means a page in the album you are currently working on – you probably took a picture of yourself and your friend in Paris, and even though you went to the Centre Pompidou, you forgot to actually take a pic of the building itself – well, let the web be your library!
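To make the rule above a bit more concrete, here is a minimal Python sketch of how it might be expressed. This is not code from Ansgar's talk: the `AlbumPage` class, the `augment_page` function and the `stub_lookup` helper are all hypothetical names, and the lookup just returns made-up file names where a real system would query a source such as Flickr.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AlbumPage:
    """One page of the photo book being authored (hypothetical model)."""
    photos: List[str] = field(default_factory=list)
    location: Optional[str] = None  # e.g. a place name attached to the page


def augment_page(
    page: AlbumPage,
    find_photos_near: Callable[[str], List[str]],
    max_extra: int = 2,
) -> AlbumPage:
    """Rule: if a page holds two or fewer pictures, add photos found by location."""
    if len(page.photos) > 2 or page.location is None:
        return page  # the rule does not fire, so the page is left as it is
    extra = find_photos_near(page.location)[:max_extra]
    return AlbumPage(photos=page.photos + extra, location=page.location)


# Stand-in for a real lookup against a photo-sharing site; the data is made up.
def stub_lookup(location: str) -> List[str]:
    catalogue = {"Centre Pompidou": ["pompidou_front.jpg", "pompidou_escalator.jpg"]}
    return catalogue.get(location, [])


page = AlbumPage(photos=["us_in_paris.jpg"], location="Centre Pompidou")
augmented = augment_page(page, stub_lookup)
print(augmented.photos)
```

Passing the lookup in as a function keeps the rule itself independent of where the extra photos come from, so the stub could be swapped for a real web service call.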

So the goal is clear: develop a procedure for applying automatic content augmentation in the creation of good photo books.

But what makes a ‘good’ photo book anyway? Here are some of the results of a structural analysis of real, human-created photobooks conducted at CeWe Color:

- % of photos with faces: 36%

- Number of album pages: 16.96

- Photos per page: 6.69

- Text fields per page: 1.45

- % of pages with text: 87%

Many rules can be derived from the structural analysis and then applied in turn to the creation of photo books, e.g. rules like this one:

- If the text is located in the upper third of a page

- if the font size is equal to or larger than 16 points

- if the number of words is less than 10

- if there is no caption on the page that has a bigger font size

- then this page is the title
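The title-page rule above translates fairly directly into code. The following is only a sketch under my own assumptions: `TextField` and `is_title_page` are hypothetical names, the vertical position is modelled as a fraction of the page height, and "no caption with a bigger font size" is read as "no other text field on the page has a bigger font size".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TextField:
    """A text field on an album page (hypothetical model)."""
    text: str
    font_size_pt: float
    top_fraction: float  # vertical position: 0.0 = top of page, 1.0 = bottom


def is_title_page(fields: List[TextField]) -> bool:
    """Return True if some text field satisfies all four conditions of the rule."""
    for candidate in fields:
        in_upper_third = candidate.top_fraction < 1 / 3
        large_enough = candidate.font_size_pt >= 16
        short_enough = len(candidate.text.split()) < 10
        # Assumption: every other text field counts as a potential caption.
        nothing_bigger = all(
            other.font_size_pt <= candidate.font_size_pt
            for other in fields
            if other is not candidate
        )
        if in_upper_third and large_enough and short_enough and nothing_bigger:
            return True
    return False


title_page = [
    TextField("Paris 2008", font_size_pt=24, top_fraction=0.1),
    TextField("A weekend away", font_size_pt=12, top_fraction=0.8),
]
print(is_title_page(title_page))
```

The thresholds (upper third, 16 points, 10 words) are taken from the rule as presented; how text positions and font sizes are actually extracted from a photo book is left open here.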

Ansgar recommended xSmart, which he described as a “context-driven authoring tool for page-based multimedia presentations.”

Ansgar’s presentation was followed by two more: one by Yves Raimond on Interlinking Music on the Web of Data, and one by Michael Hausenblas on Interlinking Multimedia. Despite my best intentions, I did not manage to cover these two in detail, but at least I gathered the links to relevant resources from all three sessions…

Links for Ansgar Scherp’s session

- Continuous Media Markup Language (CMML) (see also: CMML on Wikipedia)

- COMM – A Core Ontology for Multimedia

- Caliph and Emir – Java & MPEG-7 based tools for annotation and retrieval of digital photos and images

- X-COSIM – a framework for Cross(X)-COntext Semantic Information Management

Links for Yves Raimond’s session

- Music Ontology Specification

- The Timeline Ontology

- The Event Ontology

- Functional Requirements for Bibliographic Records

- www.SonicVisualiser.org – a program for viewing and analysing the contents of music audio files

- www.dbtune.org – music-related RDF

- Yves Raimond, Christopher Sutton and Mark Sandler 2008: Automatic Interlinking of Music Datasets on the Semantic Web. (PDF, 467 KB)

- Interview with Yves Raimond: Finding vegetarian music: What B.B. King and the Beastie Boys have in common

- DB-Tune Facet Demo

- Henry 1 and 2 – a SWI-Prolog N3 parser/reasoner, and DSP-driving SPARQL end point

Links for Michael Hausenblas’ session

- InterlinkingMultimedia.info – a wiki dedicated to Interlinking multimedia (iM), “a light-weight bottom-up approach to interlink multimedia content on the Web of Data”.

- Rammx – RDFa-deployed Multimedia Metadata

- CaMiCatzee – multimedia interlinking concept demonstrator.

Last but not least: Ansgar Scherp allowed us a sneak peek of SemaPlorer, a Large-scale Semantic Faceted Browsing Application for Multimedia Data that is going to be revealed on Dec 2, 2008, at the BOEMIE (Bootstrapping Ontology Evolution with Multimedia Information Extraction) workshop in Koblenz. Here is an abstract:

Navigating large media repositories is a tedious task, because it requires frequent search for the ‘right’ keywords, as searching and browsing do not consider the semantics of multimedia data. To resolve this issue, we have developed the SemaPlorer application. SemaPlorer facilitates easy usage of Flickr data by allowing for faceted browsing taking into account semantic background knowledge harvested from sources such as DBpedia, GeoNames, WordNet and personal FOAF files. The inclusion of such background knowledge, however, puts a heavy load on the repository infrastructure that cannot be handled by off-the-shelf software. Therefore, we have developed SemaPlorer’s storage infrastructure based on Amazon’s Elastic Computing Cloud (EC2) and Simple Storage Service. We apply NetworkedGraphs as additional layer on top of EC2, performing as a large, federated data infrastructure for semantically heterogeneous data sources from within and outside of the cloud. Therefore, SemaPlorer is scalable with respect to the amount of distributed components working together as well as the number of triples managed overall.

Steffen Staab, Information Systems and Semantic Web (ISWeb), University of Koblenz-Landau, Germany

Thank you, thank you, thank you, it was a lovely event with an unusually high amount of processable input!
